Artificial intelligence is increasingly embedded within everyday devices. Smartphones, IoT gadgets, and vehicles now run AI models directly on the...

Skyld

Transform your AI models into safe and valuable assets.

Our Solutions

Prevent Copy and Reuse of Your Proprietary Algorithms

AI Protection

Secure your on-device AI models against reverse engineering with a low computing footprint. Protect your competitive edge and your technological lead.

AI Licensing

Deploy, manage, and monetize your AI models with confidence. Protect your intellectual property and supercharge profits.

Adverscan

Harden your AI against adversarial examples. Even high-performing AI can be deceived. Test your model's resilience in the lab before it gets deployed.

Awards

Marie Paindavoine won the first #WomenInTech award, celebrating women entrepreneurs shaping the future of tech in Europe, organized by Wavestone and Les Echos.

Skyld won the Access-2-Finance Award during European Cyber Week 2023, recognizing us as one of the top cybersecurity startups in Europe.

Marie Paindavoine, founder and CEO, won the European Cyber Woman Award in the Entrepreneur category, recognizing her impact in AI and cybersecurity.

We were honored to be among the 12 most promising cybersecurity startups in Europe, reaching the finals of the ECSO Startup Award 2024.

Blog, research, and product releases

Latest blog posts

Adversarial Patches in the Wild

What if you wanted to make a self-driving car stop in the middle of the highway? Sounds impossible? Let’s see....

How to Build an Adversarial Patch?

Adversarial Patches are increasingly common attacks on AI models. But how easy are they to set up? In this article,...

How to Build an Adversarial Example?

Adversarial Examples are increasingly common attacks on AI models. But how easy are they to set up? In this article,...

How AI Models can be Fooled: Adversarial Examples?

Did you know that a simple sticker on a STOP sign is sufficient to fool a self-driving car? Why? Because...

How to Accurately Measure VRAM Usage

This article provides a practical guide on how to accurately measure VRAM usage in Python for NVIDIA GPUs. It starts...

Google Photos' AI Models: The Secret Sauce That Can...

Google Photos is one of the most widely used photo management applications globally, pre-installed on almost every Android device running Google...

New Deployments, New Threats: How To Protect Local AI...

As deep learning (DL) models become integral to application functionality, protecting them is more important than ever. In this article,...

Attack On AI Models: What You Need to Know!...

Artificial Intelligence (AI) powers a wide range of modern technologies — from autonomous vehicles to facial recognition systems. Every AI...

AI Learning Types: Supervised, Unsupervised & Reinforcement

Artificial Intelligence (AI) and Machine Learning (ML) have transformed the way we interact with technology—powering features from voice assistants to...

How Do You Reverse-Engineer an Android App?

The world of Android applications is constantly evolving, offering a myriad of features to users worldwide. However, this diversity and...

What Are the Applications of On-Device Machine Learning?

AI models are everywhere—from unlocking your phone to powering medical diagnostics. But few realize how exposed these models become once...

Artificial Intelligence Model Extraction

Machine learning models are the results of highly complex computations and optimization over a massive amount of data. Data is...

Model Inversion Attacks in Machine Learning: Are Your AI...

Model inversion attacks pose a real threat to machine learning models trained on sensitive data — from industrial secrets to...

Protect Your On-Device Artificial Intelligence Algorithms: Encryption Is Not...

On-Device Artificial Intelligence (AI) is an invaluable asset to many industries, offering revolutionary capabilities in analysis and prediction. But with...

Expert Series: Understanding and Defending Against Adversarial Attacks on...

This post introduces the research of Thibault Maho, a Ph.D. student working on the security of neural networks. His work...

Edge AI: Benefits, Applications and Risks

Edge AI combines artificial intelligence with edge computing to enable fast, private, and efficient decision-making right on devices like smartphones,...

Our News

Here Is Our Latest News

Skyld, French Deeptech Startup, Raises €1.5 Million to Secure...

We are pleased to announce our first round of fundraising. The round was backed by French and Swiss investors: Auriga...

Read More

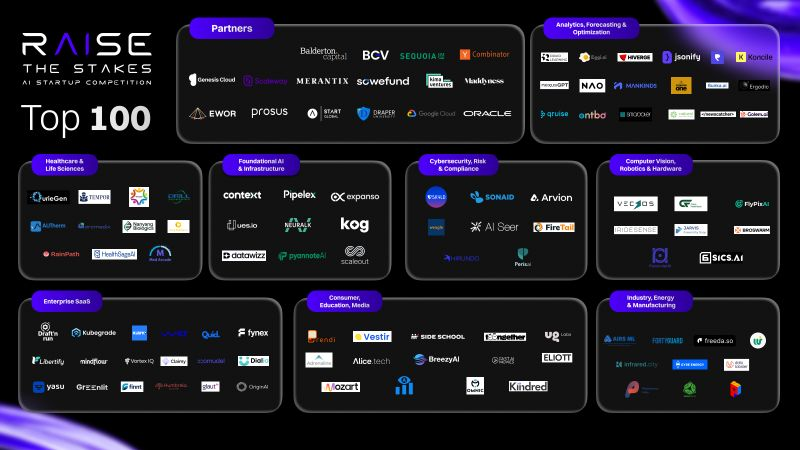

Innovation and Recognition: Skyld among the 100 most promising...

We are proud to announce that Skyld has been selected as one of the 100 most promising AI startups of...

TV Show – AI and Cybersecurity: New Threats, New...

On April 2nd, we had the pleasure of being invited by Thales to take part in a special live TV...

VivaTech 2025: Go Behind the Scenes of Our Participation!...

From June 11 to 14, 2025, Paris Expo Porte de Versailles opened its doors again for the biggest innovation event...

Skyld Among the Têtes 2025: A Bold and Inspiring...

We are delighted to announce that Skyld has been recognized as one of the most innovative and inspiring companies in...

Season 7 Of The Women Entrepreneurs Program by Orange...

March 2025 marked the start of Season 7 of the Women Entrepreneurs Program. Led by Orange, this program supports women-led...

Skyld joins the Female Founders Fellowship at STATION F...

We are proud to announce that Skyld is now part of the Female Founders Fellowship at STATION F, the world’s...

Skyld Wins the #WomenInTech Award: A Big Step for...

On March 6, Marie Paindavoine, founder of Skyld, won the very first #WomenInTech award, organized by Wavestone and Les Echos....

Marie Paindavoine Has Been Selected As One Of The...

Marie Paindavoine, CEO of Skyld, is included in the list of the 100 innovators of 2025 by Le Point. This...

Skyld on the Red Carpet: Review of WAICF 2025...

From February 13 to 15, 2025, the Palais des Festivals et des Congrès in Cannes hosted the 4th edition of...

Skyld at AI Business Day: The Summit for Action...

The AI Business Day, part of the AI Action Summit organized under the Élysée’s patronage, has become the must-attend event...

Marie Paindavoine Joins Women TechEU Program: A Recognition For...

2025 is off to a great start for Skyld! We are excited to announce that Marie Paindavoine, our CEO, is...

3rd Edition of the Winter Research School by CyberSchool...

On February 6, 2025, Skyld took part in the 3rd edition of the École d’Hiver Recherche. The event was organized...

13 French Startups to Watch And Skyld Is On...

Skyld is featured in the prestigious list of “13 French startups to watch outside of Paris, according to VCs” by...

In The Spotlight: Marie Paindavoine Wins The Cyber Woman...

On December 10th in Paris, women in cybersecurity were celebrated!

Imagine Summit: A Day Focused on Innovation

The 9th Imagine Summit just came to a close! Organized by Le Poool and La French Tech Rennes St-Malo, in...

GenerationAI: A Vision of AI Beyond Algorithms

On December 3rd, our CEO Marie Paindavoine was at GenerationAI Paris 2024, giving a presentation on “Model Extraction: Why You...

Skyld Joins The New Season Of Thales Cyber@Station F...

The Thales Cyber@Station F acceleration program is a great opportunity for Skyld to benefit from Thales’ expertise and ecosystem.

We're Now Part Of NVIDIA's Inception Program For...

We are excited to announce that we have been accepted into NVIDIA’s Inception Program, which supports start-ups in AI and...

Skyld At VivaTech 2024

VivaTech opened its doors on Wednesday, May 22, 2024, and ran until May 25. This 8th edition brought together technology...

Read More

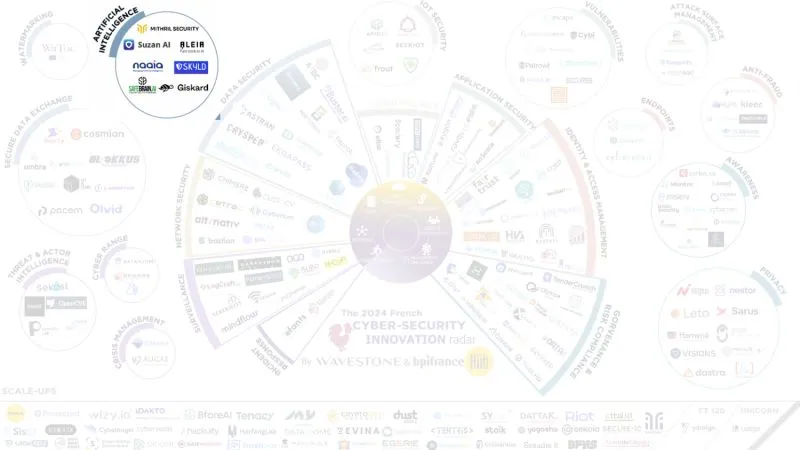

On Wavestone's Radar!

Skyld is proud to be listed on Wavestone's 2024 radar of French cybersecurity start-ups in the Artificial Intelligence category....

Skyld, The Nominated Startup, Wins The Audience Award At...

The Halle de la Brasserie in Rennes was the stage for an event, the Creators' Morning, bringing together nearly 250...

Skyld At The ECSO Startup Award Finals Which Took...

What an exciting adventure Skyld embarked on by participating in the finals of the European Cyber Security Organisation (ECSO) Startup...

Skyld In The Spotlight for The 38th Edition Of...

Every year, this prestigious event highlights exceptional businesses, emphasizing their achievements in crucial areas such as societal engagement, innovation, responsible...

Flashback To Our Participation At WAICF At The Palais...

A few weeks ago, the Skyld team had the exceptional opportunity to participate in the World Artificial Intelligence Cannes Festival...