Google Photos' AI Models: The Secret Sauce That Can Be Stolen

Google Photos is one of the most widely used photo management applications in the world, pre-installed on almost every Android device running Google Mobile Services (GMS). Users appreciate it for powerful features such as “Magic Eraser” and other AI-powered photo editing tools. To keep its competitive edge, Google does not open-source the AI models behind these features. At Skyld, we found that malicious actors could steal the application’s deep learning models for their own gain, potentially even using Google’s proprietary AI features outside the application itself. In this study, we examine AI model extraction in Google Photos, focusing on how the models are stored and protected within the Android environment.

The Technical Breakdown

The Discovery

During our analysis of the Google Photos Android application, we discovered that encrypted TensorFlow Lite models are embedded within the app (TensorFlow Lite is a lightweight version of Google’s machine learning framework). Each AI-powered feature in Google Photos, such as Magic Eraser, Photo Unblur, Portrait Light and Magic Editor, relies on a specific subset of the discovered models to operate.

Where Are These Models Hiding?

To identify which machine learning library the application uses, we first searched the application’s library files for common frameworks such as TensorFlow, PyTorch, or ONNX Runtime. This search showed that Google Photos relies on TensorFlow Lite (TFLite), which makes sense given that TFLite is optimized for mobile devices and is developed by Google. Knowing this, we could narrow our efforts to locating and extracting the TFLite models embedded within the application using reverse engineering techniques.
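The library search described above can be sketched as a simple byte-string scan over the native libraries packaged in an APK. This is only an illustration, not the tooling we used: the marker strings in `FRAMEWORK_MARKERS` are assumptions chosen for the example, and real builds may strip or rename such symbols.

```python
import zipfile

# Hypothetical marker strings; actual libraries may expose different names.
FRAMEWORK_MARKERS = {
    "TensorFlow Lite": [b"tflite", b"TfLite"],
    "PyTorch": [b"pytorch", b"libtorch"],
    "ONNX Runtime": [b"onnxruntime"],
}


def detect_frameworks(apk_path):
    """Map each native library in the APK to the frameworks whose markers it contains."""
    hits = {}
    with zipfile.ZipFile(apk_path) as apk:
        for name in apk.namelist():
            if not name.endswith(".so"):
                continue  # only native libraries are inspected here
            data = apk.read(name)
            found = sorted(
                framework
                for framework, markers in FRAMEWORK_MARKERS.items()
                if any(marker in data for marker in markers)
            )
            if found:
                hits[name] = found
    return hits
```

A hit only suggests that a framework is linked into a library; it still has to be confirmed by inspecting the binary.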

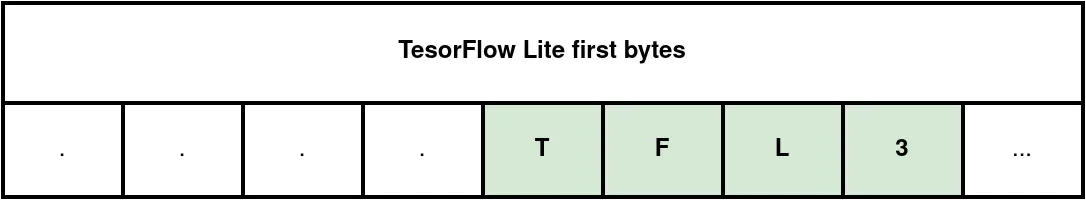

TFLite defines a specialized file format that stores machine learning models efficiently and is well suited to mobile and embedded devices. Within this file, flatbuffers are used to serialize both the model architecture and its parameters, allowing compact storage and rapid access during inference. TFLite uses the flatbuffers file identifier TFL3, which is located at a 4-byte offset from the beginning of the file, as shown in Figure 1. We therefore used this magic number to find TFLite files in the application.

Figure 1: File identifier in a TensorFlow Lite model file.
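As a minimal sketch of the magic-number search, the function below scans an arbitrary blob (an asset file or a native library, for instance) for the TFL3 identifier and reports where a model could begin. The function name is ours, and a match is only a candidate, since the four bytes can also occur by chance.

```python
MAGIC = b"TFL3"


def find_tflite_candidates(blob: bytes):
    """Return start offsets of possible TFLite flatbuffers inside `blob`."""
    starts = []
    pos = blob.find(MAGIC)
    while pos != -1:
        # The identifier sits 4 bytes after the start of the flatbuffer,
        # so a match closer than 4 bytes to the blob start cannot be a model.
        if pos >= 4:
            starts.append(pos - 4)
        pos = blob.find(MAGIC, pos + 1)
    return starts
```

For a standalone model file, the only expected result is offset 0.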

Models Stored Unencrypted

We initially identified two machine learning models within the application’s assets during our analysis. These models appeared to be stored directly in the APK file without any additional protection. This led us to assume that they may not hold significant value for Google as intellectual property. This assumption was further reinforced by the protection applied to other models, described in the section “Models Stored Encrypted”.

By leveraging the TFL3 magic number, we identified the presence of TensorFlow Lite (TFLite) models directly within the compiled native library libnative.so. The magic number served as a reliable marker to locate the start of these TFLite files. To determine the end of each model file, we needed to parse the flatbuffers structure used by TFLite. Given that TensorFlow is open-source, we used the schema tensorflow/compiler/mlir/lite/schema/schema_v3c.fbs to understand and navigate the flatbuffers format. This allowed us to accurately identify the boundaries of each model within the binary data. Through this approach, we successfully extracted the complete TFLite models from libnative.so.
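The first step of navigating the flatbuffers structure can be sketched as follows. The code follows the root offset to the `Model` table, reads its vtable, and returns the `version` field, which is the first field of `Model` in the TFLite schema. It is a way to confirm that a candidate offset really starts a model, and it makes no attempt to compute where the model ends, which requires walking every table and vector defined in the schema. The function name is ours.

```python
import struct


def tflite_model_version(buf: bytes, start: int = 0):
    """Return Model.version if a plausible TFLite flatbuffer begins at `start`, else None."""
    try:
        if buf[start + 4:start + 8] != b"TFL3":
            return None
        # Bytes 0..3: unsigned offset from the buffer start to the root table.
        (root_rel,) = struct.unpack_from("<I", buf, start)
        root = start + root_rel
        # A table begins with a signed offset pointing back to its vtable.
        (soffset,) = struct.unpack_from("<i", buf, root)
        vtable = root - soffset
        if vtable < start:
            return None
        # vtable layout: its own size, the table size, then one uint16 per field.
        vtable_size, _table_size = struct.unpack_from("<HH", buf, vtable)
        if vtable_size < 6:
            return None  # no slot for field 0
        (field0,) = struct.unpack_from("<H", buf, vtable + 4)
        if field0 == 0:
            return 0  # field absent, so the schema default applies
        (version,) = struct.unpack_from("<I", buf, root + field0)
        return version
    except struct.error:
        return None  # offsets point outside the buffer
```

Current TFLite models are expected to report version 3, so any other value is a strong hint that the magic number matched by accident.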

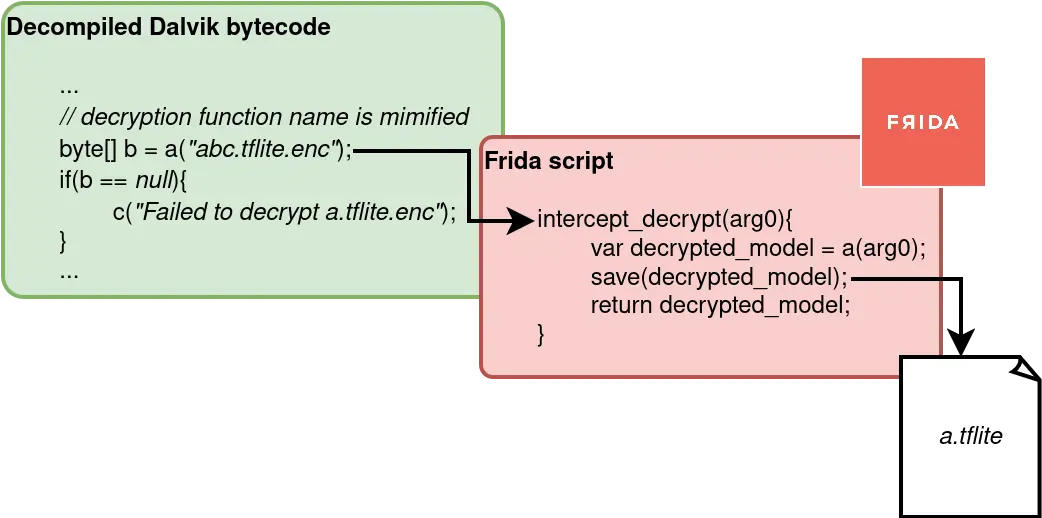

Models Stored Encrypted

In our investigation, we discovered log strings within the decompiled Dalvik bytecode revealing that encrypted files named “file_name.tflite.enc” are decrypted at runtime. This insight encouraged us to take a dynamic approach, using Frida to intercept and capture the decrypted TFLite models at runtime. By attaching our custom Frida script to the running Google Photos process, we were able to monitor and extract each decrypted file as it was loaded into memory. This bypasses the static protections and shows how dynamic attack techniques can uncover sensitive AI assets embedded within applications.

Figure 2: Frida instrumentation of the model decryption function.

In our analysis of Google Photos’ model file decryption process, we discovered that the application uses the Android Keystore system to securely manage cryptographic keys. The Keystore stores these keys in a Trusted Execution Environment (TEE), providing an additional layer of security by keeping them inaccessible to unauthorized parties. However, while this approach is robust at rest, it has a critical weakness: the model files must be decrypted at runtime before they can be executed. During decryption, there is a window in which the decrypted file can be intercepted. Using Frida, we were able to hook into this decryption step and capture the decrypted model file. This demonstrates that despite the protection offered by the Android Keystore, the need to decrypt models for execution leaves an exploitable gap. Figure 2 illustrates how Frida’s hook mechanism can be applied in this context.
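The host side of such an interception can be sketched without naming the hooked function, which this article does not disclose. The sketch assumes an injected Frida script (not shown) that forwards each decrypted buffer with `send()` and a binary attachment. Frida's Python bindings deliver these to a callback of the form `on_message(message, data)`. The helper name `make_model_saver` and the output file naming are hypothetical.

```python
import os


def make_model_saver(out_dir):
    """Build a Frida-style on_message(message, data) callback that stores TFLite payloads."""
    os.makedirs(out_dir, exist_ok=True)
    saved = []

    def on_message(message, data):
        # Frida reports script send() calls as {"type": "send", "payload": ...},
        # with any binary attachment passed separately in `data`.
        if message.get("type") != "send" or not data:
            return
        if data[4:8] != b"TFL3":
            return  # not a TFLite flatbuffer, ignore it
        path = os.path.join(out_dir, f"model_{len(saved):02d}.tflite")
        with open(path, "wb") as fh:
            fh.write(data)
        saved.append(path)

    return on_message, saved
```

With the Frida bindings installed, the callback would be registered through `script.on("message", on_message)` before the script is loaded.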

Findings Summary

In conclusion, the models were found in four distinct locations within the application:

- Downloaded and Decrypted Models: 20 models intercepted while being decrypted using the Android Keystore.

- Within libnative.so: 17 models embedded directly into the application’s compiled code.

- Application Assets: 2 models found in the asset files of the application.

- Application Files: 1 model stored in specific folders within the app.

Exploring AI Model Applications

Among the 20 models identified, we analyzed the specific tasks they perform as well as common architectural patterns. Below is an overview of the successfully recovered models:

- Face detection, image quality assessment, age estimation, and personal characteristic prediction using a SCNet-based multi-label classification model.

- Small, medium, and large object detection, with two multi-scale detector models, including one specialized for pet face detection.

- Portrait segmentation using a MobileNet-based model.

- Semantic segmentation with two models leveraging a MobileNetV2 backbone.

- Portrait video segmentation with a dedicated model for video processing.

- Depth estimation using a SwissNet-based model.

- Foreground/background segmentation with a MobileNet backbone.

- Sky segmentation using a ResNetV2-based model.

- Video shadow segmentation with a model specifically designed for videos.

- Blur detection using a MobileNetV2-based classifier to identify blurred images (used for a downstream task).

All these models are directly deployable. Figures 3 and 4 illustrate the results obtained with the portrait segmentation and depth estimation models, respectively. In each figure, the input image is on the left and the model’s output is on the right.

Figure 3: Example of a portrait segmentation model in action. The input image is on the left, and the model's output is on the right.

Figure 4: Illustration of a depth estimation model usage. The input image is on the left, and the model's output is on the right.
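To illustrate what “directly deployable” means in practice, here is a minimal sketch that runs one inference through the standard TFLite interpreter interface. It feeds an all-zero tensor instead of a real image, so it only checks that a recovered file loads and executes. The function name and the model file name in the trailing comment are hypothetical.

```python
import numpy as np


def run_with_zeros(interpreter):
    """Feed an all-zero tensor to the first input and return the first output."""
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]
    # Build a dummy input matching the shape and dtype the model declares.
    dummy = np.zeros(inp["shape"], dtype=inp["dtype"])
    interpreter.set_tensor(inp["index"], dummy)
    interpreter.invoke()
    return interpreter.get_tensor(out["index"])


# With TensorFlow installed (hypothetical file name):
# import tensorflow as tf
# mask = run_with_zeros(tf.lite.Interpreter(model_path="portrait_segmentation.tflite"))
```

Producing results like those in the figures would additionally require the preprocessing each model expects (input size, normalization, channel order), which has to be inferred from the model itself.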

The Implications

Competitor Exploitation

A competitor could extract these models and save the time and resources required to develop similar AI capabilities from scratch. This provides a shortcut to replicating Google Photos’ advanced features, including its “Magic Eraser” tool, and removes the need for extensive data collection, training, and independent development.

Adversarial Attacks

The extraction of these models opens the door to more sophisticated attacks, such as the creation of adversarial examples. These are inputs designed to mislead AI models, potentially bypassing security measures or manipulating application functionality in unintended ways.

The Future of AI Security

As AI becomes more integral to everyday applications, the need for robust protection mechanisms becomes increasingly critical. This discovery underscores the importance of safeguarding intellectual property and ensuring that proprietary AI models cannot be easily extracted and exploited by malicious actors.

How Skyld Can Help

At Skyld, we recognize the potential risks associated with exposed AI models and are committed to providing solutions to protect against such weaknesses. Our product offers comprehensive protection for AI models both at rest and during runtime, ensuring that your intellectual property remains secure and cannot be exploited by competitors or malicious actors.

By leveraging Skyld’s advanced security measures, organizations can safeguard their AI assets and maintain a competitive edge in an ever-evolving technological landscape.

Google disclosure timeline

- Dec 23, 2024 - Reported

- Dec 23, 2024 - Accepted

- Feb 6, 2025 - Reward Program decision

- Feb 28, 2025 - Submission of this article