Edge AI: Benefits, Applications and Risks

Edge AI combines artificial intelligence with edge computing to enable fast, private, and efficient decision-making right on devices like smartphones, sensors, and cameras — without relying on the cloud. In this post, we explore how Edge AI works, where it’s being used, the benefits it brings, and the risks companies need to watch out for.

This post answers:

- What is Edge AI, and how is it different from cloud AI?

- What are the main benefits of Edge AI?

- Where is Edge AI already being used?

- What are the key risks of deploying AI at the edge?

What is Edge AI?

Edge AI is the fusion of two technologies: edge computing and artificial intelligence.

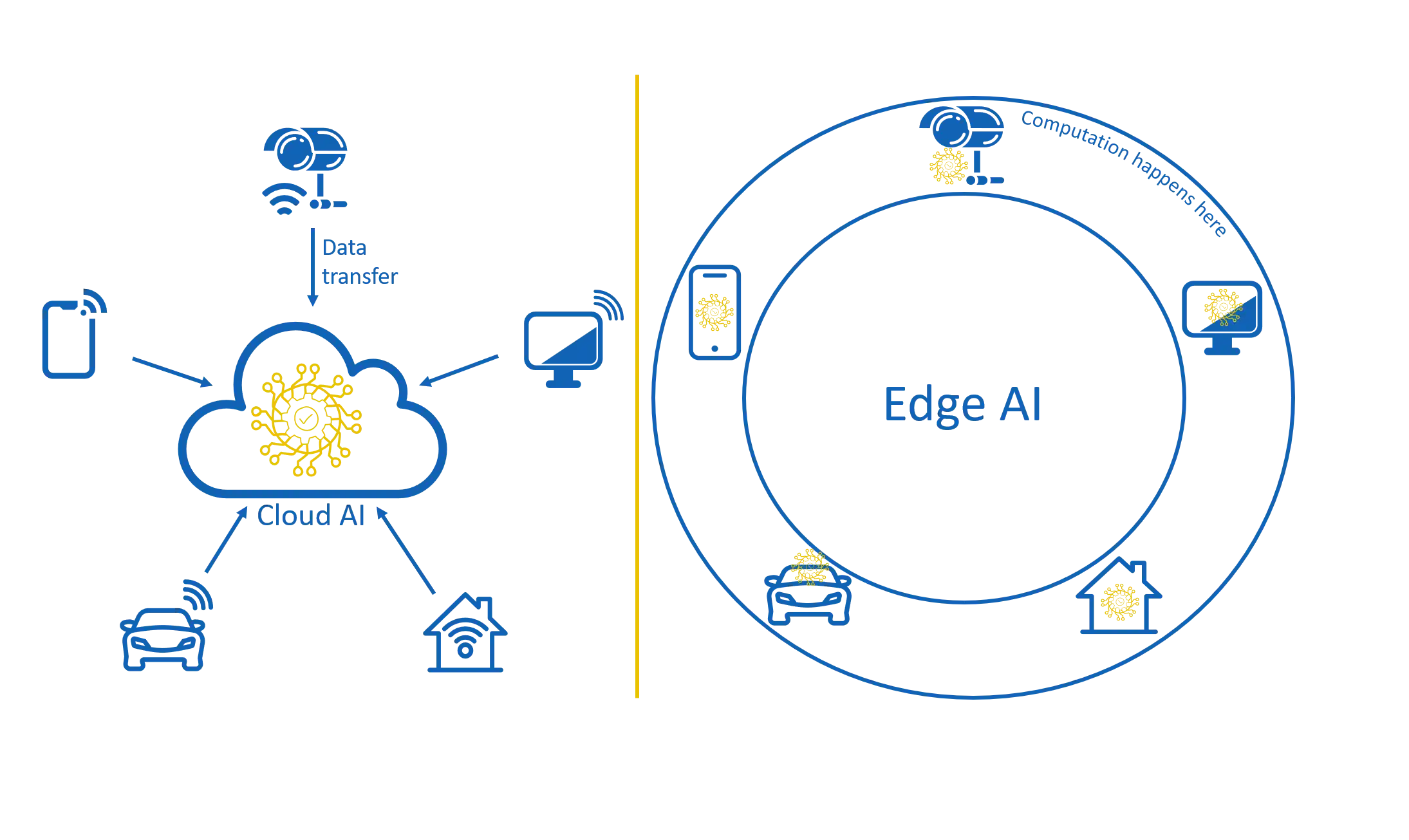

According to Gartner, the edge is the physical location where things and people connect with the digital world. The edge is where data originates, and with edge computing, data is processed directly on or near the device that creates it, whether that’s a phone, a car, or a factory sensor. This contrasts with traditional cloud computing, where data is sent to a central server for processing.

Edge AI refers to artificial intelligence algorithms that run on edge devices. Instead of sending data to the cloud for analysis, devices perform AI inference locally. (Inference is the stage where a trained model makes predictions, and it requires far less computing power than training.)

This means that smart devices can make decisions instantly, without waiting for a round trip to the cloud — and without sending sensitive user data off-device.
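As a rough illustration of local inference, here is a minimal sketch of a device scoring a sensor reading with a tiny pre-trained model. The weights, the `predict_on_device` helper, and the sample reading are all hypothetical; a real deployment would load a model trained elsewhere and likely use an on-device runtime.

```python
import math

# Hypothetical parameters of a tiny logistic-regression model,
# assumed to have been trained elsewhere and shipped to the device.
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.1


def predict_on_device(features):
    """Run a single forward pass locally; the raw reading never leaves the device."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid turns the score into a probability


# Hypothetical sensor reading captured on the device.
reading = [1.2, 0.4, 2.0]
score = predict_on_device(reading)
decision = "alert" if score >= 0.5 else "ok"
print(decision)
```

Inference here is a single weighted sum, which is why it fits on modest hardware, whereas training would repeat passes like this many times over a whole dataset.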

Cloud AI versus Edge AI

Benefits of Edge AI

- Data privacy: Data is processed where it is produced, which reduces the attack surface and the likelihood of leaks. This is a major advantage in healthcare and the quantified-self industry, which rely heavily on private data. Processing data locally lowers the risk of large-scale breaches, and edge AI systems often delete data after use to further improve privacy.

- Reduced latency: Transmitting data takes time, especially for video or audio streams. Edge AI brings the computation to the device itself, allowing real-time analysis. This enables a seamless experience in video games and is critical in time-sensitive settings like autonomous driving, where delays can be dangerous.

- Reduced costs: Using edge AI helps reduce costs related to backend maintenance, cloud storage, and bandwidth.

- Reliability: Edge devices continue to operate even without internet access. This is crucial in transportation applications, where connectivity isn’t guaranteed.

- Reduced power consumption: Processing data locally cuts down on data transmission, which lowers energy consumption.

Edge AI use-cases and industry examples

- Industrial IoT: Edge AI can take automation on the assembly line to the next level, enabling reliable, round-the-clock operations. For example, using video analytics, AI can perform visual inspections of products to detect defects.

- Autonomous Vehicles: In autonomous vehicles, real-time analysis is critical. Whether it’s to adjust the car’s behavior at an intersection or respond to unexpected events, decisions must be made within a fraction of a second. In addition, network coverage on the road is often unreliable, so the vehicle cannot depend on the cloud.

- Healthcare: Edge AI has numerous applications in healthcare. For example, it can be used to diagnose or monitor patients in real time. Privacy is paramount when handling health data, and minimizing centralization helps reduce the risk of large-scale data breaches.

- Smart Homes: Smart homes rely on IoT devices that collect and process data from sensors throughout the house. Using edge AI instead of a centralized server improves privacy and reduces costs related to cloud computing and network usage.
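To put the autonomous-vehicle latency point in numbers, the short calculation below estimates how far a car travels while it waits for a decision. The speed and the two delay figures are hypothetical values chosen for illustration, not measurements.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Return the metres travelled at speed_kmh while waiting delay_ms."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Hypothetical figures for illustration only.
SPEED_KMH = 90
CLOUD_ROUND_TRIP_MS = 200
ON_DEVICE_MS = 20

cloud_metres = distance_during_delay(SPEED_KMH, CLOUD_ROUND_TRIP_MS)
edge_metres = distance_during_delay(SPEED_KMH, ON_DEVICE_MS)

print(f"cloud: {cloud_metres:.1f} m, on-device: {edge_metres:.1f} m")
# prints "cloud: 5.0 m, on-device: 0.5 m"
```

Under these assumptions, the cloud round trip costs the car roughly a full car length of travel before it can react.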

Specific risks of Edge AI

- Data loss: Data privacy is a great asset for edge AI, but it usually means data is discarded after processing. That data could have been useful for fine-tuning models. Federated learning addresses this: it is an intricate technique in which devices train on their local data and share only model updates, not the raw data.

- IoT security is brittle: A distributed infrastructure is harder to secure, and each device adds to the attack surface. You’ll need additional security measures to protect your applications:

  - Implement proactive threat detection technologies to identify suspicious behavior as early as possible.

  - Enforce automatic patch cycles across devices.

We can help you implement these protections; feel free to contact us.
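Returning to the data-loss risk, the federated learning idea can be sketched in a few lines. This is a simplified illustration of federated averaging on a one-parameter linear model, with hypothetical device datasets and helper names (`local_update`, `federated_average`); production systems add secure aggregation, device sampling, and much more.

```python
def local_update(weights, data, lr=0.1):
    """One gradient-descent step of linear regression on a device's private data."""
    grad = [0.0] * len(weights)
    for x, y in data:
        error = sum(w * xi for w, xi in zip(weights, x)) - y
        for i, xi in enumerate(x):
            grad[i] += 2 * error * xi / len(data)
    return [w - lr * g for w, g in zip(weights, grad)]


def federated_average(updates, sizes):
    """Average the device weights, weighting each device by its dataset size."""
    total = sum(sizes)
    return [
        sum(u[i] * n for u, n in zip(updates, sizes)) / total
        for i in range(len(updates[0]))
    ]


# Hypothetical private datasets that never leave their devices (both follow y = 2x).
device_data = [
    [([1.0], 2.0), ([2.0], 4.0)],
    [([3.0], 6.0)],
]

global_weights = [0.0]
for _ in range(50):
    # Each device trains locally and sends back only its updated weights.
    updates = [local_update(global_weights, d) for d in device_data]
    global_weights = federated_average(updates, [len(d) for d in device_data])

print(global_weights)
```

The server only ever sees weight vectors, so the raw readings can still be discarded on the device after each round.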

- Intellectual property: If an attacker gains access to your application layer, they can reverse engineer your model and replicate your product for a fraction of the initial cost. Think of all the time invested in acquiring, cleaning, and formatting data to train a world-class model. Our next article will be dedicated to this problem: stay tuned!

- Adversarial attacks: With access to the model, an attacker can craft input data designed to deceive it. They add subtle noise, imperceptible to the human eye, that drastically alters the inference result. Such inputs are called adversarial examples. For instance:

  - A machine-learning-powered scanner checks luggage for dangerous items at an airport. A knife could be shaped in such a way that the system interprets it as a pencil.

  - Harmless-looking stickers can be placed on traffic signs to fool an autonomous vehicle’s recognition system. A stop sign might then be interpreted as a soda can and ignored by the car, potentially causing a crash.
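To make the adversarial-example idea concrete, here is a minimal sketch in the style of the fast gradient sign method, applied to a hypothetical linear classifier. The weights, the input, and the `epsilon` budget are made up for illustration; real attacks target deep networks and compute gradients with an ML framework.

```python
def score(weights, bias, x):
    """Linear classifier: a positive score means 'stop sign' in this toy setup."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def perturb(weights, x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model, the gradient of the score with respect to the input
    is simply the weight vector, so an attacker who has the model has it too.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model parameters and input features.
weights = [0.5, -0.25, 0.75, -0.5]
bias = 0.0
x = [0.5, 0.5, 0.5, 0.5]

x_adv = perturb(weights, x, epsilon=0.25)

print(score(weights, bias, x))      # 0.25  (classified as a stop sign)
print(score(weights, bias, x_adv))  # -0.25 (no longer classified as a stop sign)
```

No feature moves by more than `epsilon`, yet the prediction flips, which is why keeping the model out of an attacker's hands matters.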