Model Inversion Attacks in Machine Learning: Are Your AI Models at Risk?

Model inversion attacks pose a real threat to machine learning models trained on sensitive data, from industrial secrets to biometric information. This post explains what model inversion attacks are, who is at risk, and how to protect your AI models against them.

This post answers:

- What is a model inversion attack?

- Who should be concerned?

- What are the real-world consequences?

- How can you prevent it?

Have You Ever Wondered If Your Training Data Could Be Extracted?

Model inversion attacks matter in any industry that trains models on confidential or personal data. The sections below show how these attacks work and what they can expose.

What is a Model Inversion Attack?

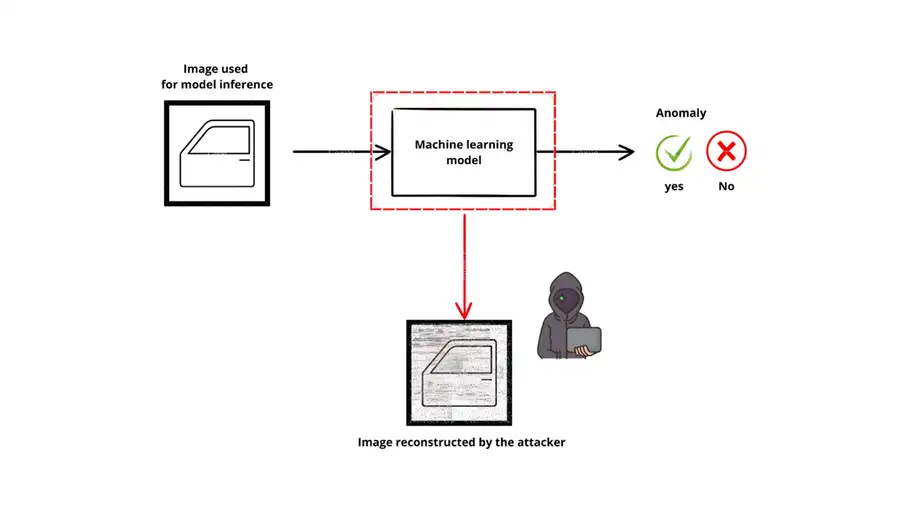

A model inversion attack is an attack in which an adversary uses the outputs of a trained machine learning model to reconstruct sensitive data, such as approximations of specific examples from the original training set.

In simpler terms, an attacker with no direct access to the underlying dataset can “invert” the model to recover private information it learned during training, potentially exposing confidential or personal data.
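To make the idea concrete, here is a minimal sketch in Python with NumPy. It is a toy illustration, not a reproduction of any published attack: the dataset, the two class "prototypes", and the function names (`make_toy_data`, `train`, `invert_class`) are all assumptions made for this example. It also assumes the attacker can read the model's weights. A small softmax classifier is trained on noisy copies of two private patterns, then the attacker runs gradient ascent on an input to maximize the model's confidence for one class and ends up with something close to that class's private pattern.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def make_toy_data(n_per_class=100, seed=0):
    """Hypothetical 'private' dataset: each class is a noisy copy of a prototype."""
    rng = np.random.default_rng(seed)
    prototypes = np.array([
        [1.0] * 8 + [0.0] * 8,  # private pattern behind class 0
        [0.0] * 8 + [1.0] * 8,  # private pattern behind class 1
    ])
    y = np.repeat([0, 1], n_per_class)
    X = prototypes[y] + rng.normal(0.0, 0.1, size=(2 * n_per_class, 16))
    return np.clip(X, 0.0, 1.0), y, prototypes

def train(X, y, n_classes=2, lr=0.5, epochs=300):
    """Plain gradient descent on the cross-entropy loss of a softmax classifier."""
    n, d = X.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]  # one-hot labels
    for _ in range(epochs):
        G = (softmax(X @ W + b) - Y) / n
        W -= lr * (X.T @ G)
        b -= lr * G.sum(axis=0)
    return W, b

def invert_class(W, b, target, steps=200, lr=0.1):
    """Gradient ascent on the input to maximize log p(target | x), kept in [0, 1]."""
    x = np.full(W.shape[0], 0.5)  # start from a neutral gray input
    for _ in range(steps):
        p = softmax(x @ W + b)
        grad = W[:, target] - W @ p  # gradient of log p(target | x) w.r.t. x
        x = np.clip(x + lr * grad, 0.0, 1.0)
    return x

# The attacker never sees X, only the trained parameters W and b.
X, y, prototypes = make_toy_data()
W, b = train(X, y)
recovered = invert_class(W, b, target=0)
confidence = softmax(recovered @ W + b)[0]
similarity = np.corrcoef(recovered, prototypes[0])[0, 1]
print(f"confidence={confidence:.3f}, correlation with private pattern={similarity:.3f}")
```

The recovered input is compared with the private pattern only to measure how much leaked; a real attacker would not have that ground truth. Real models and real data are far more complex, but the principle is the same: the model's own parameters guide the search toward what it memorized.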

Should I Be Concerned About Such Attacks?

Any AI model trained on sensitive data or industrial secrets is a potential target.

This includes sectors like:

- Healthcare — Reconstructing patient images or health records

- Biometrics — Recreating faces, fingerprints, or voices

- Industrial Systems — Revealing failure patterns, trade secrets, or proprietary processes

Example Scenarios:

- A model that predicts faults in industrial components (e.g., in aviation or automotive) could reveal engineering methods or design knowledge if attacked.

- A voice recognition model on a smartphone could be inverted to reconstruct a user’s voiceprint, enabling harmful deepfakes [1].

A Famous Example:

Research has shown that image recognition models can leak private data through inversion attacks. Attackers with access to a model’s API can generate realistic approximations of training images [2,3], enabling possible re-identification and posing a serious threat to privacy and security.

How to Protect Against Model Inversion Attacks

At Skyld, we have developed a specialized development kit to protect on-device and on-premise machine learning models from extraction and reverse-engineering. This protection also guards against white-box inversion attacks, in which the attacker has full access to the model's weights and architecture. These are the most powerful and dangerous type of inversion attack.

References:

[1] Introducing Model Inversion Attacks on Automatic Speaker Recognition, Pizzi et al.

[2] Model Inversion Attacks that Exploit Confidence Information and Basic Countermeasures, Fredrikson et al.

[3] The Secret Revealer: Generative Model-Inversion Attacks Against Deep Neural Networks, Zhang et al.

Photo credit: © Claude AI