Adversarial Patches in the Wild

What if you wanted to make a self-driving car stop in the middle of the highway? Sounds impossible? Let’s see.

Take a look at this video: a man fools autonomous cars just by wearing a shirt printed with a stop sign.

But what if a similar attack could be performed with a small patch specifically crafted to fool the model? This is not science fiction, this is called adversarial patch. In this article, we’ll explain what an adversarial patch is, how to create them, and more importantly, how this applies to real-life conditions.

What are adversarial patches?

An adversarial patch is a specific type of attack targeting AI models, that aims to fool an object detection model into recognizing something that isn’t actually present in the image. These attacks are visible to the human eye (unlike adversarial examples) and can be deployed in real-world situations.

The key difference between an adversarial patch and a printed stop sign lies in their nature.

A stop sign printed on a T-shirt relies on the non-robustness of the stop sign features learned by the model. While this approach can fool some systems, it’s much easier to defend against: models can be retrained to distinguish between genuine stop signs and those printed on clothing or carried by pedestrians.

In contrast, an adversarial patch is intentionally optimized to exploit the AI model training algorithm weakness. No matter how robust the model is, it always will be possible to build a patch to fool it.

For more information about patches and adversarial examples we refer the reader to, this article.

Next, we’ll explore how to generate adversarial patches that deceive an AI model into detecting a stop sign in real-world scenarios.

Methodology

As explained in this previous article, it is easy to generate patches thanks to the overlay provide by Skyld on this GitHub repository. After experimenting with the ART (Adversarial Robustness Toolbox) library, particularly the AdversarialPatchPyTorch module, we successfully created patches usable in real-world.

Model Choice

We selected YOLOv5s as our target model for several strategic reasons. First, YOLOv5s represents a widely-deployed architecture in real-world computer vision applications, making it a relevant target for demonstrating practical adversarial threats. Its balance between accuracy and computational efficiency has made it a popular choice for edge devices and autonomous systems.

More importantly, YOLOv5s is trained on the comprehensive COCO2017 dataset, which includes the three object classes most relevant to our automotive safety scenario: stop sign, person, and car. This training foundation makes it particularly suitable for testing adversarial patches designed to exploit traffic-related object detection.

Attack Dataset Choice

For real-world patches, the choice of the training dataset is crucial. It must contain varied images: from different angles, contexts, weather, and lighting conditions.

It’s also essential to use an attack that implements EOT (Expectation over Transformation). This technique consists of training a patch by simulating various transformations (rotation, lighting, noise, perspective) on the training images. The goal is to simulate real-world conditions like changes in illumination or camera angles, making the patch more robust and effective in practice.

Here are some images from our attack training dataset:

Below is the generated patch, trained to be detected as a stop sign:

Patch Validation

Before testing the generated patch in the real world, it needs to be verified, i.e. the patch should be tested on a batch of images as diverse as those used in training. During this verification, the patch must be placed anywhere in the images and can also be transformed. It can be warped to simulate wind effects or have its saturation reduced to mimic printer degradation.

If the patch doesn’t work in at least 90% of verification cases, it’s unlikely to perform reliably in real-world conditions.

Here’s a comparison of a subset of the validation dataset, with and without the patch applied:

Patch Printing

Printing the patch is one of the most important parts of the process. If the printed patch has incorrect colors, slight deformations, or is too blurry, it may compromise the results.

However, our goal is also to demonstrate that anyone could realistically carry out this attack. In our case, we used basic printer paper and a low-quality printer for testing. We tested the patch’s reliability despite the slight degradation.

Here is the patch printed in real life:

Adversarial Patch in the Real World

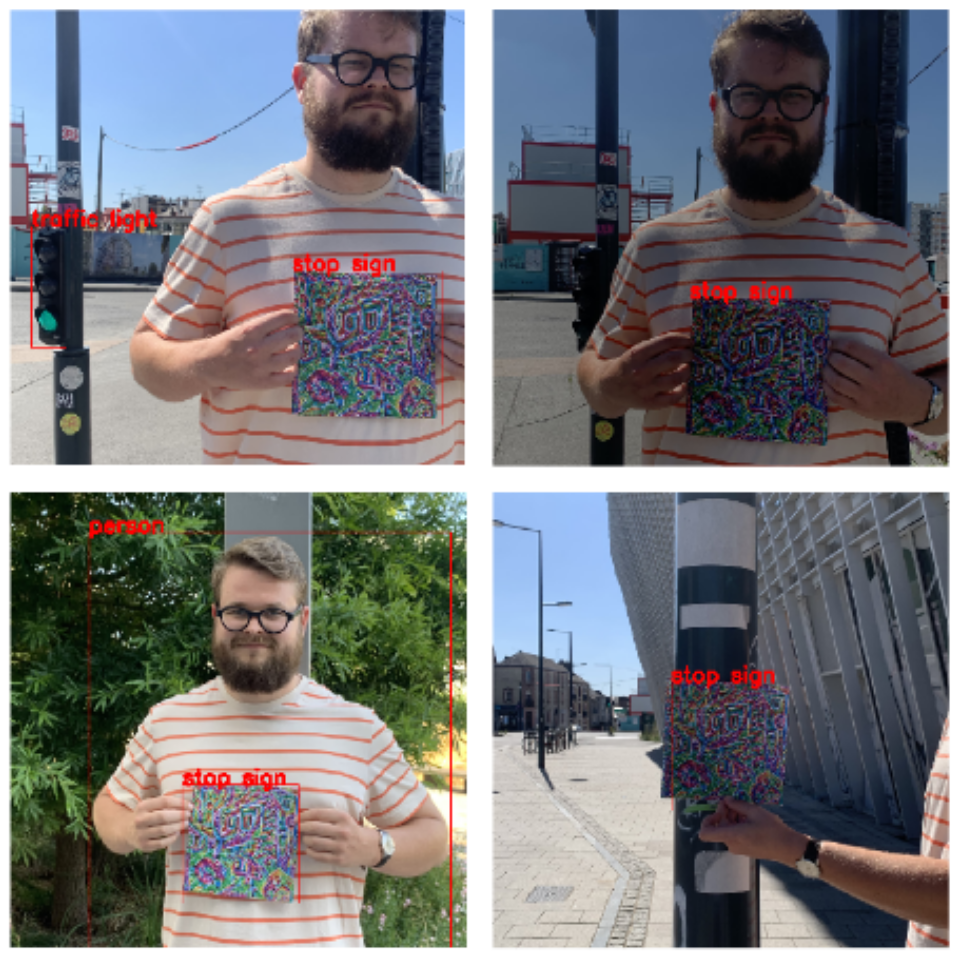

After printing, we tested the patch in real-world conditions in the city of Rennes. Here are some photos of the patch in use:

As shown, the patch performs with similar confidence as during the training and validation phases. It remains effective even under varying lighting conditions. We observed that despite drastic lighting changes in some images, the adversarial patch still works.

The results are promising, but there were still situations where the patch didn’t work as expected. In particular, when the camera isn’t close enough to the patch, detection can fail. In some cases, the patch is detected but misclassified as the wrong object.

All photos were taken using a smartphone, and we couldn’t modify the image format. It’s worth noting that image compression can cause some patch data to be lost, which may explain several of the failures. Further testing in different conditions would help us better evaluate the robustness of the patch.

This patch example was made in a few days with only 10 training images, and its lack of robustness is explicable. In a real attack scenario, an attacker with more resources would be capable to create a more accurate patch. For example, the attacker could have a more various dataset with more images, use professional printing services, run multiple training iterations, or even train the patch to fool multiple object detection models.

Defense against Adversarial Patches

Our real-world testing shows that adversarial patches can indeed fool object detection models like YOLOv5s, even when printed in low quality. While not perfect, the results are compelling and raise important questions about AI safety.

As seen in this article, these attacks are really easy to setup, when you have access to the model architecture and its parameters (weights and biases).

So, when an attacker tries to break your AI model, their priority is to obtain the architecture and the parameters. Most of the models in embedded devices are poorly or not protected at all. Defending against adversarial examples before securing the AI model is pointless. It’s like installing antivirus software on a smartphone without setting a password.

For more information about protecting your AI models, please visit the AI Protection page on the Skyld Website.

Despite everything, if you are still confident about the safety of your models, Skyld can conduct an audit to show you how vulnerable you are. Please visit Skyld’s AdverScan page.