Have you ever wondered if your machine learning models are vulnerable to attacks that can extract sensitive information, revealing the secrets embedded in their algorithms? The threat of model inversion attacks is a pressing concern across various industries, especially when dealing with confidential data. In this article, we’ll explore the insidious nature of model inversion attacks and delve into their potential consequences. Are your machine learning models truly secure, or could malicious actors exploit them to uncover sensitive information? Let’s find out.

What is a model inversion attack?

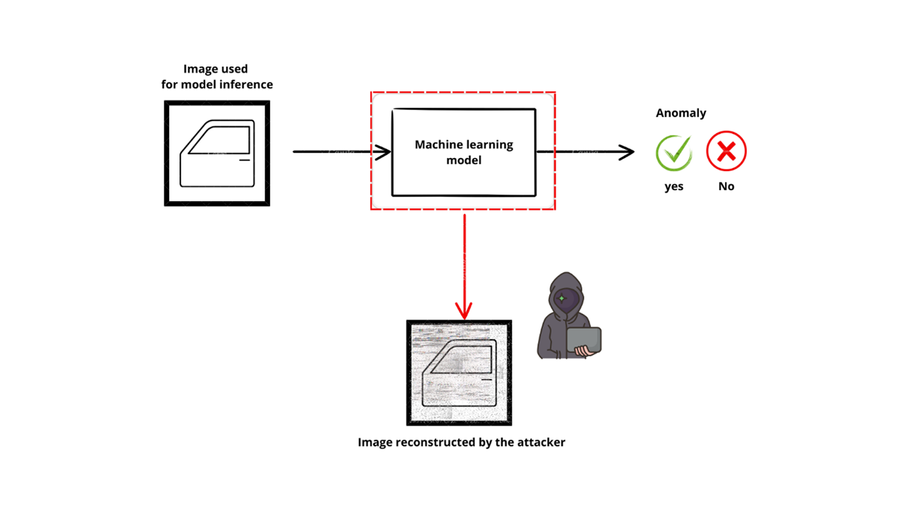

It is a type of machine learning attack where an attacker aim to extract sensitive information from the model’s output, such as instances of the training set.

Am I concerned by such attacks?

Various use cases are concerned by the threat of model inversion attacks, particularly when the training data contains sensitive information or industrial secrets.

Consider a scenario where a machine learning model predicts faults in industrial components for sectors such as aviation or automotive. After stealing the model, a competitor could execute a model inversion attack, revealing industrial secrets.

Similarly, you can imagine a machine learning model deployed on your phone that identifies your voice from audio. By crafting a model inversion attack an attacker could recreate your voice, potentially leading to the creation of harmful deepfakes [1].

A famous example:

In the case of an image recognition model, studies reveal that an attacker, armed with access to the machine learning model, can craft meaningful representations of individuals [2,3]. Consequently, these representations allow for the reidentification of individuals, posing a serious threat to privacy and security. Figure 1 illustrates an attack, showcasing the successful extraction of an individual’s representation by the attacker.

Preventing model inversion attacks with Skyld:

At Skyld, we developed a specialized development kit to protect machine learning models from attackers. Our kit seamlessly integrates into your existing development pipelines, minimizing disruptions. Additionally, with our advanced technology, your AI models will be safeguarded against even the most sophisticated attacks. Our solution is designed to meet the strictest security standards, ensuring compliance with industry regulations.

[1] Introducing Model Inversion Attacks on Automatic Speaker Recognition, Pizzi et al.

[2] Model Inversion Attacks that Exploit Confidence Information and Basic Countermeasures, Fredrikson et al.

[3] The secret revealer: Generative Model-Inversion Attacks Against Deep Neural Networks, Zhang et al.

0 commentaire